A speaker made an unsettling discovery: the photo she had supplied ahead of an event where she was due to speak had been reworked by artificial intelligence, and she appears to have been slightly sexualized in the process. The anecdote indirectly raises the question of how generative AIs are trained.

Will it also be necessary to build artificial intelligences away from humans, as some Internet users are trying to do with the web? That is one of the questions that may come to mind after reading the story shared on X (formerly Twitter) by Elizabeth Laraki, an American speaker who specializes in AI and user experience (UX).

In her message, published on October 15 on the social network, she recounts a surprising experience. The photo she provided to promote an upcoming conference at which she is due to speak had been reworked by a generative artificial intelligence and, in the process, slightly sexualized.

A more open blouse

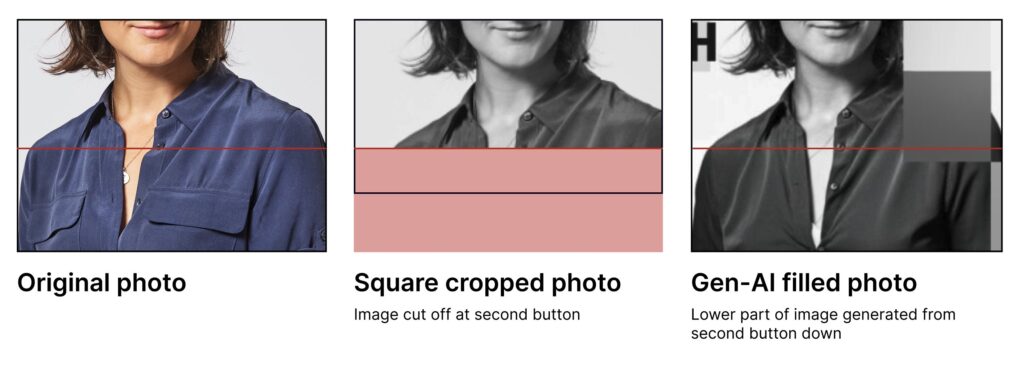

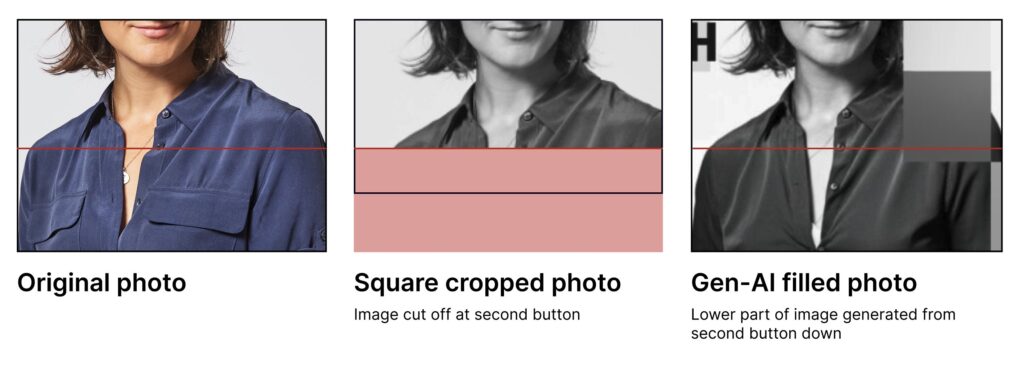

In the original photo, the speaker wears a blue blouse open at the collar, which shows off a pendant. In the version reworked by the AI (the service used was not named), the blouse is unbuttoned further, slightly revealing what looks like a bra or other underwear.

Was this a deliberate decision by the conference organizers to play on the sex appeal of the participants? According to Elizabeth Laraki, it was instead an unintended result, the consequence of a series of missteps in the process of promoting the event, which she recounts in her tweet.

According to the organizer’s inquiries, relayed by Elizabeth Laraki, the person in charge of their social media accounts “used a cropped image (of the speaker, Editor’s note) from their website. She needed it to be more vertical, so she used an image extender tool to make it taller,” she said.

The generative AI then “invented the lower part of the image (in which it believes that women’s shirts should be unbuttoned further, with some tension at the buttons, and reveal a small hint of something underneath).” That underwear does not exist in the real photo.

In the fake image the AI produced, the bra is discreet, at any rate discreet enough to slip past the person responsible for adapting the photo for social media. The good news is that things were quickly put right: the fake photo was deleted and an apology was issued.

The question of training data

Anecdotal as it is, this story nevertheless raises a technical problem. Any generative artificial intelligence is built from the training data it is given. To train an AI to recognize a cat, for example, the common approach in deep learning is to expose the model to a large number of cat images.

The case reported by Elizabeth Laraki therefore leads us to question the data corpus used to train the model in question. More broadly, it also raises the question of how women are represented, or represent themselves, on the Internet, if the company behind the model simply collected photos freely available online.

These issues are far from new and have been raised in other contexts. In 2018, for example, an MIT study showed that some algorithms had been trained predominantly on photos of white men, making them less accurate for people with other skin tones.

Depending on the contents of the corpus used to train an AI, there is a risk that the system will reproduce biases and stereotypes. The problem is not fully resolved, as this story shows, and it affects text as well as images: in 2023, the way ChatGPT was trained was at the heart of a political debate in France.

Source: www.numerama.com