French civil society organizations have sued the branch of the French social security system responsible for families, the public body Caisse Nationale des Allocations Familiales (CNAF), which distributes housing allowances through a network of 101 family support offices (CAF) across France using a scoring algorithm. “These risk assessment systems may pose an unacceptable risk under the Artificial Intelligence Act,” said researcher Soizic Pénicaud.

Fifteen French NGOs have sued the CNAF before the French Council of State for using a risk scoring algorithm that affects nearly half of the French population. The legal action was initiated after the Court of Justice of the European Union (CJEU) ruled that decision-making based on scoring algorithms that use personal data is illegal under the EU General Data Protection Regulation (GDPR). The civil organizations called on the Council of State to refer the case to the CJEU for a preliminary ruling. The case could take two to five years to be adjudicated, depending on how the referral is handled.

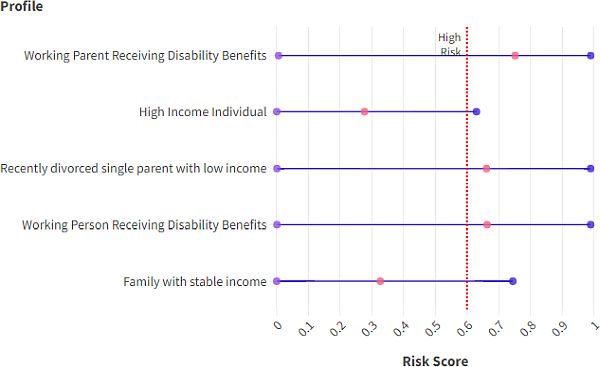

“This algorithm mathematically reflects the discrimination that already exists in our society. It is neither neutral nor objective,” said Marion Ogier, lawyer for the Human Rights League, at a press conference in Paris. Since 2010, the CNAF has used an algorithm to select the beneficiaries whose benefits will be reviewed. These checks focus on cases deemed “higher risk” based on the beneficiary’s profile and situation.

However, a local study published in December 2023 criticized these checks because they were not truly random. Seventy percent of the 128,000 checks performed in 2021 were triggered by scoring algorithms, according to the CNAF’s 2022 report. “CNAF’s algorithm is only part of the system. State pension systems, health insurance and the employment service also use similar algorithms,” added Ogier. The software is designed to identify only “significant and repeated overpayments” among the beneficiaries most prone to making mistakes in their returns, said Nicolas Grivel, director general of the CNAF. “If a court were to make a decision that required an adjustment of the system, the CNAF would of course comply with it,” he said.

The claim submitted to the Council of State by 15 NGOs revolves around two types of arguments: data protection and discrimination. La Quadrature du Net, Amnesty International France, the Abbé Pierre Foundation and the Human Rights League, among others, claim that the CNAF violated the GDPR because the use of the algorithm does not respect the principle of proportionality. “Almost half of the French population is controlled,” said Bastien Le Querrec, a legal expert at La Quadrature du Net (LQDN).

According to the non-governmental organizations, the CNAF algorithm also indirectly discriminates against certain persons, which violates the EU Directive on the principle of equal treatment and the EU Charter of Fundamental Rights. According to them, the algorithm targets poorer people receiving social benefits because the benefit rules are complicated, which increases the likelihood of errors. “The algorithm perpetuates the political stereotype of ‘welfare-dependent’ people and discriminates through its operating methods,” said Le Querrec. The CNAF algorithm, for example, discriminates against women, who make up 95% of single-parent families in the first 18 months after separation, he said.

In their legal submission, the NGOs write that CNAF’s algorithm triggers 69% of checks, but that these account for only 49% of all recovered erroneous payments. In contrast, checks triggered by other means account for 31% of all checks, but 51% of recovered erroneous payments. The NGOs therefore believe that scoring algorithms undermine the fair treatment of users of public services by over-scrutinizing certain groups for contested benefits.
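The comparison behind these figures can be made explicit with a short calculation. The sketch below uses only the four percentages quoted from the NGOs’ submission; the function name and the idea of dividing the share of recovered payments by the share of checks are illustrative assumptions, not a method described by the NGOs or the CNAF.

```python
def recovery_per_check_share(share_of_checks: float, share_of_recovered: float) -> float:
    """Share of recovered payments obtained per unit share of checks.

    A value of 1.0 would mean a channel recovers exactly in proportion
    to the number of checks it triggers. (Hypothetical helper for illustration.)
    """
    return share_of_recovered / share_of_checks


# Figures quoted from the NGOs' legal submission.
algorithm = recovery_per_check_share(share_of_checks=0.69, share_of_recovered=0.49)
other_means = recovery_per_check_share(share_of_checks=0.31, share_of_recovered=0.51)

# How much more an average non-algorithmic check recovers, relative to an
# average algorithm-triggered check, under the assumption above.
ratio = other_means / algorithm

print(f"algorithm-triggered checks: {algorithm:.2f}")
print(f"checks triggered otherwise: {other_means:.2f}")
print(f"ratio: {ratio:.2f}")
```

On these figures, a check triggered by other means accounts for roughly 2.3 times as large a share of recovered payments as a check triggered by the algorithm. This is a rough proportional reading and says nothing about the size or type of the individual cases involved.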

“A scoring algorithm is more than a mere sum of lines of code; it is always politically motivated and often supports repressive austerity policies that harm the most vulnerable,” said Soizic Pénicaud, an independent researcher who worked on the Lighthouse Reports investigation into the CNAF and is a lecturer in digital public policy at Sciences Po. France is not alone in using such algorithms. Similar algorithms are used in other EU countries, including the Netherlands and Denmark, added Pénicaud. Germany even wants to include them in a legal framework.

The French case specifically points to the targeted use of such algorithms by the authorities. The Dutch government resigned in January 2021 after scoring algorithms wrongly accused thousands of parents of cheating. In Denmark, the scoring algorithm used by the public benefits administration came under fire in a March 2023 investigation by Lighthouse Reports and WIRED for allegedly using data based on nationality, a practice similar to ethnic profiling, to catch fraudsters applying for aid.

Pénicaud proposes a reversal of the burden of proof, according to which “EU countries must prove that their systems are not harmful and non-discriminatory before implementation.” The Internal Market Committee of the European Parliament (IMCO) formulated the same idea in October 2023 when it adopted its own-initiative report on the addictive nature of online services, which may feature in upcoming debates on the Digital Fairness Act. “These risk assessment systems are considered to pose an unacceptable risk under the Artificial Intelligence Act,” said Pénicaud.

Source: sg.hu