With DeepSeek-V3, China may have reason to worry OpenAI and the major American tech companies. Its open source approach, in a sector increasingly dominated by closed models from private companies, lets it move quickly for much less money.

In just a few years, China has established itself as the leading country in technological innovation. Long seen as a mere assembler or copier, the country of Huawei and Xiaomi now invests more in research than any other, hoping to dethrone the United States. Beyond smartphones and computers, China can now design its own chips and has recently become a leader in the automotive sector.

Ahead in generative artificial intelligence thanks to companies like OpenAI, Google and Meta, the United States long believed it could hold its lead long enough to keep China at bay. Yet two years after the launch of ChatGPT, China claims to have overtaken it.

DeepSeek: the Chinese model factory

Founded in 2023, DeepSeek is moving fast. In less than a year, it put several models considered effective online, largely thanks to heavy financial backing from Chinese players. DeepSeek works on classic LLMs (like GPT-4o), LLMs specialized for particular tasks (coding, for example) and cutting-edge models capable of “thinking”, or reasoning (like o1 or o3).

Its latest feat is DeepSeek-V3, announced on December 26, 2024. Here is what the new model offers:

- An interface closely modeled on ChatGPT's, with very similar animations. There is even a Search button backed by a built-in search engine.

- A markedly faster generation speed (60 tokens per second; a token is a fragment of text, often shorter than a word).

- A completely open source model with 671 billion parameters, more than the 405 billion of Meta's Llama 3.1, considered the best American open source model.

- A training cost estimated at $5.5 million, which some put at about ten times less than American models, with 14.8 trillion tokens used for training.

- A significantly cheaper API, which could lead many developers to choose DeepSeek over GPT-4o or another American solution, provided the model really performs as claimed.

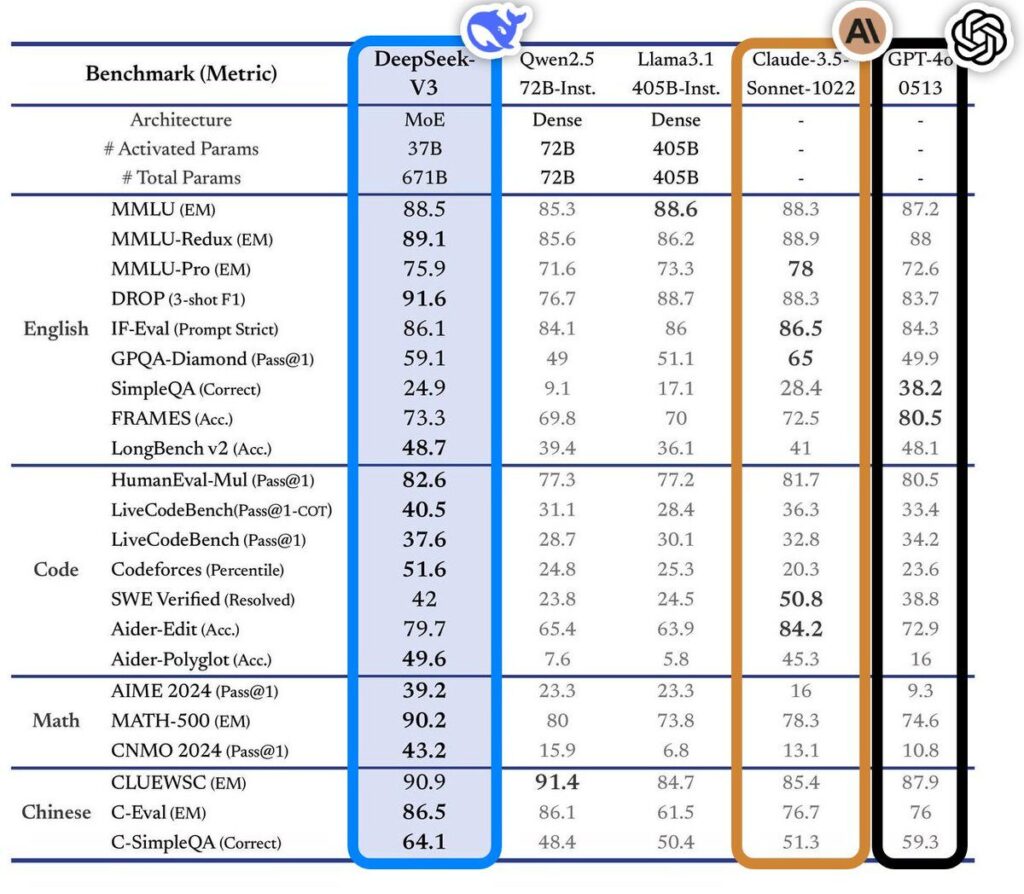

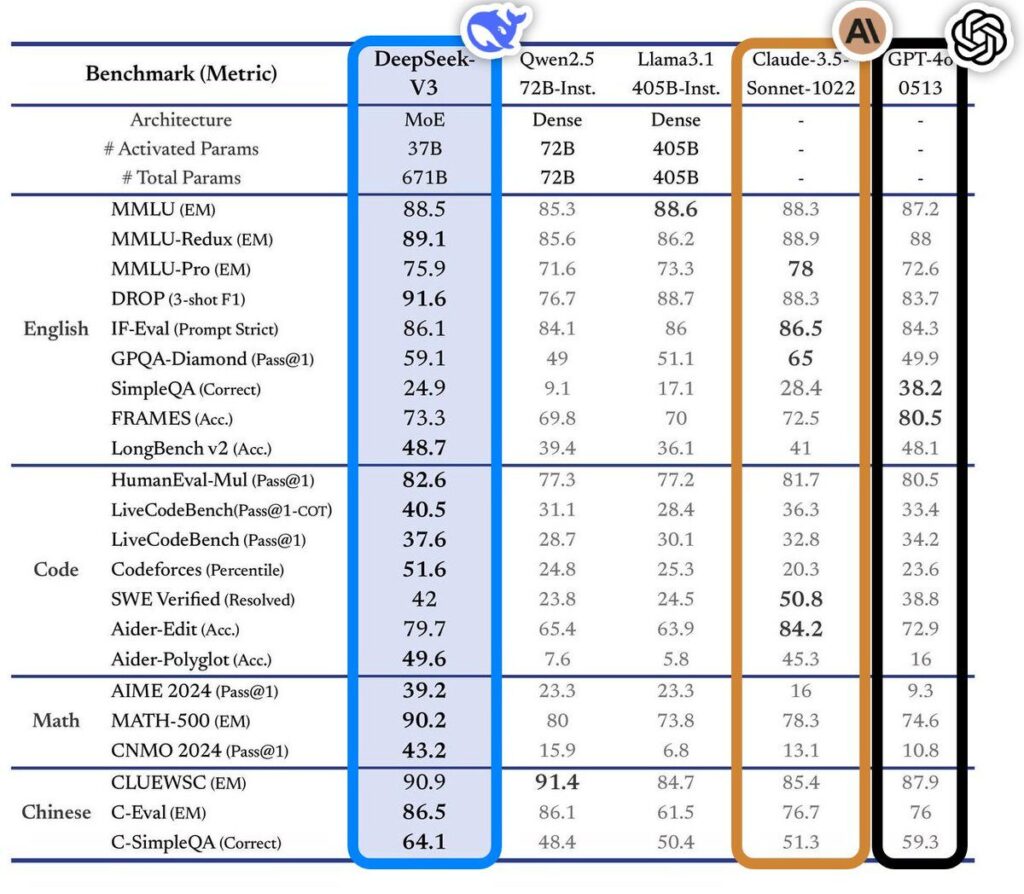

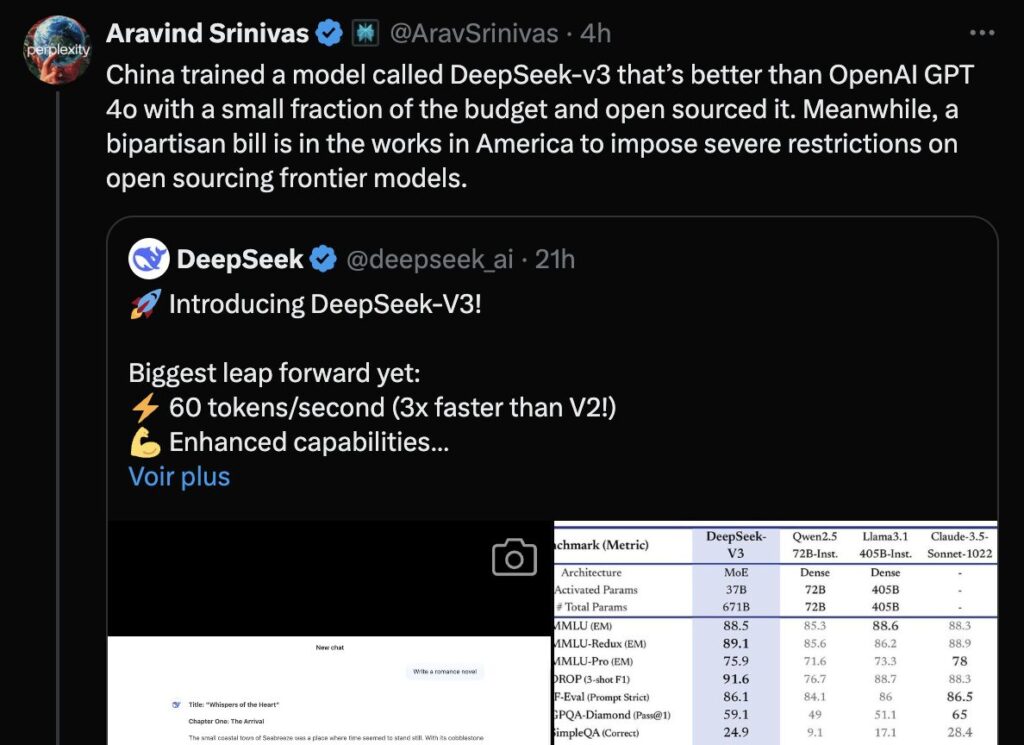

According to benchmarks published by DeepSeek, its V3 model is comparable to GPT-4o (OpenAI) and Claude 3.5 Sonnet (Anthropic), the two most popular LLMs in the United States. DeepSeek-V3 reportedly does even better at math and coding, and naturally excels in Chinese. All this remains to be proven (early testers note that DeepSeek sometimes seems to get confused), but it is very promising, especially at such a low cost.

Should the US be worried about DeepSeek-V3?

In a few months and with relatively little money, DeepSeek's Chinese engineers would thus have managed to match GPT-4o, ChatGPT's default model. OpenAI of course retains many advantages (multimodality, voice mode, integrations, image and video generation, etc.), but the feat remains impressive. DeepSeek's communication coup is all the more successful because this announcement has caused a stir in the United States, whereas its previous ones interested only a well-informed audience. Perplexity's CEO has even expressed surprise at American policy hostile to open source, which he says slows researchers down. In short, the United States has taken the bait and is worried about China's rise.

Another strong point: DeepSeek-V3 is already available for free. Anyone can try it.

Beyond DeepSeek, which aims to be China’s ChatGPT, China is funding other cutting-edge models, among them CogVideoX, HunyuanVideo (Tencent) and Kling for video generation, and Alibaba's Qwen. They all have one thing in common: open source. This approach differs from that of the United States and lets researchers move faster, with much more data.

The big unknown: how will Donald Trump's United States, well aware of this Chinese competition, react? A technology war like the one waged against Huawei, aimed at slowing Chinese research, cannot be ruled out.

Source: www.numerama.com