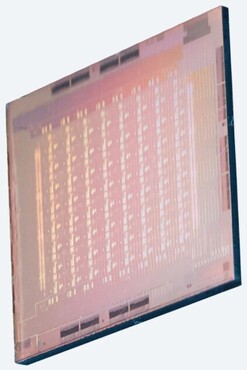

The new MTIA (Meta Training and Inference Accelerator) is the second generation of the series.


Meta has announced its latest AI accelerator, which can be used for both training and inference. The second-generation design in the MTIA (Meta Training and Inference Accelerator) series draws considerably more power than its predecessor: the TDP per package has risen from 25 watts to 90 watts. It is also much faster, with the chip reaching 708 TFLOPS in 8-bit floating-point format and 354 TFLOPS in 16-bit. These figures assume sparsity; without it, expect 354 and 177 TFLOPS, respectively.
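The relationships between these figures are easy to check with a little arithmetic. The sketch below uses only the numbers quoted above; the dictionary and function names are illustrative and do not come from Meta.

```python
# Peak throughput figures quoted in the article, in TFLOPS per chip.
SPARSE_TFLOPS = {"8-bit": 708.0, "16-bit": 354.0}
DENSE_TFLOPS = {"8-bit": 354.0, "16-bit": 177.0}

# TDP per package in watts: previous generation vs. the new one.
OLD_TDP_W = 25.0
NEW_TDP_W = 90.0


def sparsity_speedup(fmt: str) -> float:
    """Ratio of the quoted sparse figure to the quoted dense figure."""
    return SPARSE_TFLOPS[fmt] / DENSE_TFLOPS[fmt]


def tdp_increase() -> float:
    """How many times higher the new TDP is than the old one."""
    return NEW_TDP_W / OLD_TDP_W


if __name__ == "__main__":
    for fmt in SPARSE_TFLOPS:
        print(f"{fmt}: sparsity gives a {sparsity_speedup(fmt):.1f}x higher peak")
    print(f"TDP increase: {tdp_increase():.1f}x")
```

In both formats the sparse figure is exactly twice the dense one, and the 16-bit rate is half the 8-bit rate, while the TDP has grown by a factor of 3.6.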

The chip's 64 processing blocks can also perform classic vector operations, but throughput drops to 11.06, 5.53, and 2.76 TFLOPS for 8-, 16-, and 32-bit floating-point operations, respectively.

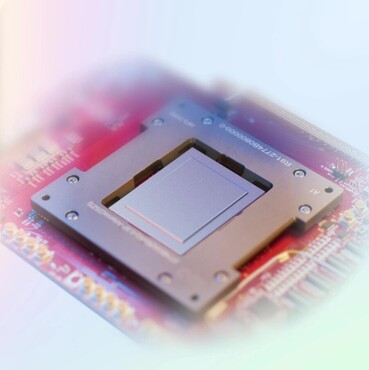

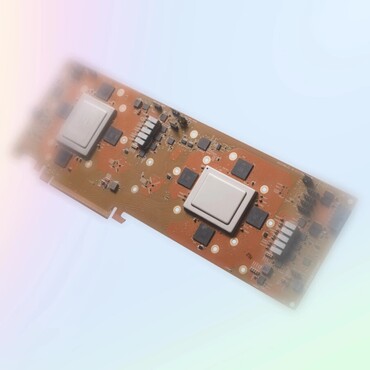

Meta places two of the new MTIA chips, manufactured on TSMC's 5 nm node and running at 1.35 GHz, on an expansion card that plugs into a PCI Express 5.0 slot and carries 128 GB of on-board LPDDR5 memory. The company can fit 72 of these accelerator cards in a single rack, which means 144 chips and a combined computing performance of more than 100 PFLOPS.
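The rack-level figure can be reproduced from the per-chip numbers. The sketch below assumes that the "more than 100 PFLOPS" claim refers to the 8-bit peak with sparsity; the article does not say which figure it is based on, and the names used here are illustrative.

```python
CARDS_PER_RACK = 72
CHIPS_PER_CARD = 2

# Assumption: the rack figure is based on the 8-bit peak with sparsity.
SPARSE_8BIT_TFLOPS = 708.0
DENSE_8BIT_TFLOPS = 354.0


def chips_in_rack(cards: int = CARDS_PER_RACK,
                  chips_per_card: int = CHIPS_PER_CARD) -> int:
    """Total number of MTIA chips in one rack."""
    return cards * chips_per_card


def rack_peak_pflops(tflops_per_chip: float) -> float:
    """Aggregate peak of one rack in PFLOPS (1 PFLOPS = 1000 TFLOPS)."""
    return chips_in_rack() * tflops_per_chip / 1000.0


if __name__ == "__main__":
    print(f"Chips per rack: {chips_in_rack()}")
    print(f"8-bit, sparse: {rack_peak_pflops(SPARSE_8BIT_TFLOPS):.3f} PFLOPS")
    print(f"8-bit, dense:  {rack_peak_pflops(DENSE_8BIT_TFLOPS):.3f} PFLOPS")
```

With 144 chips at 708 TFLOPS each, the total comes to about 101.95 PFLOPS, which matches the claim. The dense 8-bit figure gives roughly 50.98 PFLOPS, so the 100 PFLOPS mark is only reached when sparsity is counted.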

Source: prohardver.hu