While working on a larger analysis of how CPUs differ from GPUs, I noticed something interesting.

The Apple M4 chip has a 10-core GPU, while even entry-level NVIDIA and AMD graphics cards have more than a thousand cores.

And if you look at more powerful models, the gap is even wider. Here’s an example.

The M4 Max has 40 graphics cores, and in the MacBook Pro this chip scores 13,577 points in 3DMark.

The NVIDIA RTX 4070 Laptop GPU has 4608 cores, yet it scores almost the same: 12,388 points in 3DMark.

Apple did say it updated its graphics architecture two years ago with the release of the M3 processors. But surely the two companies’ graphics units can’t come from different worlds. Or can they?

Below, I work out how, and by how much, the GPUs in Apple chips differ from the others, and why MacBooks are sold to us with a small core count while RTX graphics cards are sold with a large one.

Apple has 100 times fewer cores for the same performance

Let’s compare the performance of graphics chips at different tiers from Apple, AMD and NVIDIA against the number of cores listed in their specifications.

As a benchmark, let’s take the results of Steel Nomad Lite, the cross-platform test in 3DMark. It lets you compare modern chips from different platforms under realistic gaming loads.

Below is a comparison of GPUs of different levels from three manufacturers, the number of cores in them and benchmark results.

Entry level

- Apple M4 (22 W): 10 cores, 3946 points

- NVIDIA GTX 1060 6GB (120 W): 1280 cores, 4085 points

- AMD RX 6500 XT (107 W): 1024 cores, 4815 points

Intermediate level

- Apple M4 Pro (40 W): 20 cores, 7834 points

- NVIDIA RTX 2070 (175 W): 2304 cores, 8469 points

- AMD RX 7600 (165 W): 2048 cores, 10,121 points

Advanced level

- NVIDIA RTX 4070 Laptop (140 W): 4608 cores, 12,388 points

- Apple M2 Ultra (90 W): 76 cores, 12,952 points

- Apple M4 Max MacBook Pro (70 W): 40 cores, 13,577 points

Flagship

- AMD RX 7900 XTX (355 W): 6144 cores, 29,883 points

- NVIDIA RTX 4090 (450 W): 16384 cores, 42,169 points

Because Apple’s top graphics chip is still the M2 Ultra, which lacks hardware ray tracing, even its 76-core version scores lower than the M4 Max in Steel Nomad Lite and lags far behind competitors’ flagship graphics cards.

Power consumption deserves attention too. At the same performance, Apple GPUs draw 5 times less power at the entry level and half as much at the advanced level. And that is with the Apple figures including the CPU’s power draw as well.
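To check the power claim against the list above, here is a small Python sketch that divides each Steel Nomad Lite score by the listed wattage and compares the power figures for the entry-level and advanced pairs. It uses only the numbers from the list; those wattages are quoted figures rather than measurements taken under identical conditions, so treat the result as a rough comparison.

```python
# Steel Nomad Lite score and listed power (W), copied from the list above.
GPUS = {
    "Apple M4": (3946, 22),
    "NVIDIA GTX 1060 6GB": (4085, 120),
    "AMD RX 6500 XT": (4815, 107),
    "Apple M4 Max": (13577, 70),
    "NVIDIA RTX 4070 Laptop": (12388, 140),
}


def points_per_watt(name: str) -> float:
    """Benchmark points per watt of listed power."""
    score, watts = GPUS[name]
    return score / watts


def power_ratio(apple: str, rival: str) -> float:
    """How many times more power the rival GPU is listed at."""
    return GPUS[rival][1] / GPUS[apple][1]


if __name__ == "__main__":
    for name in GPUS:
        print(f"{name:24s} {points_per_watt(name):6.1f} points/W")
    print(f"GTX 1060 vs M4: {power_ratio('Apple M4', 'NVIDIA GTX 1060 6GB'):.1f}x the power")
    print(f"RTX 4070 Laptop vs M4 Max: {power_ratio('Apple M4 Max', 'NVIDIA RTX 4070 Laptop'):.1f}x the power")
```

With these figures, the GTX 1060 is listed at about 5.5 times the M4’s power and the RTX 4070 Laptop at exactly twice the M4 Max’s.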

In other words, the math doesn’t add up at all. If you don’t know how graphics chips work, you might think Apple’s engineering geniuses have created a graphics core more than 100 times more powerful than NVIDIA’s.
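The “math that doesn’t add up” is simply the score divided by the advertised core count. This Python sketch computes that naive points-per-core figure for every GPU in the list above. It takes each vendor’s marketed core count at face value, which is exactly the assumption the rest of this article questions.

```python
# (GPU, cores as marketed, Steel Nomad Lite score), copied from the list above.
RESULTS = [
    ("Apple M4", 10, 3946),
    ("NVIDIA GTX 1060 6GB", 1280, 4085),
    ("AMD RX 6500 XT", 1024, 4815),
    ("Apple M4 Pro", 20, 7834),
    ("NVIDIA RTX 2070", 2304, 8469),
    ("AMD RX 7600", 2048, 10121),
    ("NVIDIA RTX 4070 Laptop", 4608, 12388),
    ("Apple M2 Ultra", 76, 12952),
    ("Apple M4 Max", 40, 13577),
    ("AMD RX 7900 XTX", 6144, 29883),
    ("NVIDIA RTX 4090", 16384, 42169),
]


def per_core_table() -> dict:
    """Naive benchmark points per marketed 'core' for each GPU."""
    return {name: score / cores for name, cores, score in RESULTS}


if __name__ == "__main__":
    table = per_core_table()
    for name, value in sorted(table.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:24s} {value:8.2f} points per 'core'")
    ratio = table["Apple M4 Max"] / table["NVIDIA RTX 4070 Laptop"]
    print(f"M4 Max 'core' vs RTX 4070 Laptop core: {ratio:.0f}x")
```

Counted this way, an M4 Max “core” looks about 126 times as productive as an RTX 4070 Laptop core, and the M4 against the RTX 4090 comes out above 150 times.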

This is, of course, not true.

Let’s look at what GPUs are and how their cores are counted.

How conventional GPUs are built and how they work

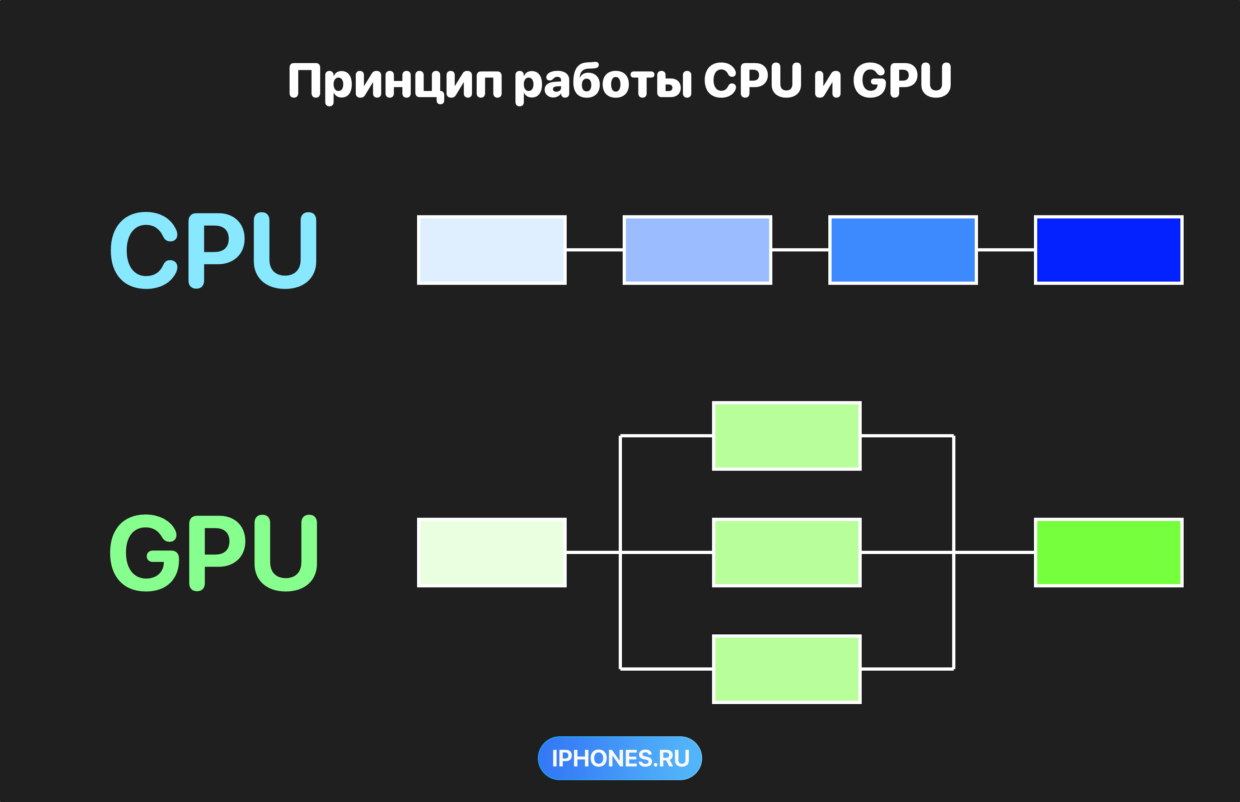

All GPUs, Apple’s included, work on the same principle: a large task is split into many identical small ones, which are computed in parallel millions of times per second.

The GPU was created to perform simple operations of the same type in parallel.

For example, in a 3D scene the computer needs to work out the positions of objects’ points in space relative to one another, or calculate the RGB color of every pixel on your screen from predefined parameters.
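To make “the same simple operation for every pixel” concrete, here is a minimal Python sketch. The function `shade_pixel` and its left-to-right fade are hypothetical choices made for illustration. Each pixel’s RGB value depends only on its own inputs, which is what lets a GPU hand every pixel to a separate calculator at once. Plain Python runs the calls one after another, so this shows the shape of the work, not the speed.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def shade_pixel(x: int, width: int, base: RGB) -> RGB:
    """The same tiny computation runs for every pixel: a left-to-right fade."""
    brightness = 1.0 - x / max(width - 1, 1)  # 1.0 at the left edge, 0.0 at the right
    return (round(base[0] * brightness),
            round(base[1] * brightness),
            round(base[2] * brightness))


def render(width: int, height: int, base: RGB) -> List[List[RGB]]:
    # Each pixel depends only on its own inputs, so all of them could be
    # computed at the same time; here they are computed one by one.
    return [[shade_pixel(x, width, base) for x in range(width)]
            for _ in range(height)]


if __name__ == "__main__":
    for row in render(4, 2, (255, 128, 0)):
        print(row)
```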

These operations are all of one type, and inside each GPU core they are handled by simple mini-calculators.

These are calculators in the literal sense: identical and simple.

They perform multiplication, addition and a couple of other simple operations. Each core has about four of these calculators of different types, called arithmetic logic units, or ALUs.

The cores themselves are called shader cores, or CUDA cores in NVIDIA graphics cards.

The term “shader core” comes from the English word “shade” (to darken or add shadow) and reflects the shading work these cores do in graphics.

Shaders determine how light interacts with the surface of objects. They change the visibility, color, and texture of objects depending on light, viewing angle, and other factors.
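A classic minimal example of a shader is Lambertian (diffuse) lighting. A surface is brightest when it faces the light and dark when it faces away. The sketch below writes this textbook formula in Python for readability. It is not vendor code. Apart from one square root in `normalize`, it uses only the multiplications and additions that ALUs are built for.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec3, b: Vec3) -> float:
    # Three multiplications and two additions.
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def lambert(normal: Vec3, to_light: Vec3, albedo: Vec3, light: Vec3) -> Vec3:
    """Diffuse color = albedo * light color * max(0, N.L)."""
    n_dot_l = max(0.0, dot(normalize(normal), normalize(to_light)))
    return (albedo[0] * light[0] * n_dot_l,
            albedo[1] * light[1] * n_dot_l,
            albedo[2] * light[2] * n_dot_l)


if __name__ == "__main__":
    red, white = (1.0, 0.2, 0.2), (1.0, 1.0, 1.0)
    up = (0.0, 0.0, 1.0)
    print(lambert(up, (0.0, 0.0, 1.0), red, white))   # facing the light: full color
    print(lambert(up, (1.0, 0.0, 1.0), red, white))   # light at 45 degrees: about 71%
    print(lambert(up, (0.0, 0.0, -1.0), red, white))  # light behind the surface: black
```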

The more of these ALU calculators a chip has, the faster it can calculate the objects in a scene, and the more of them it can handle.
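The “more ALUs, faster” rule can be shown with simple arithmetic. Suppose every pixel needs the same work and each ALU handles one pixel per step. The number of steps is then the pixel count divided by the ALU count, rounded up. This Python sketch uses that idealized model and ignores clock speed, memory and scheduling entirely. The ALU counts in the example are illustrative.

```python
def steps_needed(pixels: int, alus: int) -> int:
    """Idealized: each ALU shades one pixel per step, all ALUs work in parallel."""
    if alus <= 0:
        raise ValueError("need at least one ALU")
    return -(-pixels // alus)  # ceiling division


if __name__ == "__main__":
    pixels = 1920 * 1080  # one Full HD frame
    for alus in (1, 128, 1280, 10752):
        print(f"{alus:6d} ALUs -> {steps_needed(pixels, alus):8d} steps per frame")
```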

The official documentation shows this whole system in the pictures below, but I’ve made clearer illustrations further on.

In addition, GPUs now include tensor cores, texture units and ray tracing cores.

Tensor cores contain more complex matrix “calculators” that power upscaling technologies such as NVIDIA DLSS, AMD FidelityFX or Apple MetalFX.

Ray tracing cores calculate the paths of light rays, taking into account how they bounce off objects.
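The basic job of a tensor core is a small matrix multiply-accumulate, D = A × B + C, performed on a whole tile of numbers in one operation. The sketch below writes out the same math as plain Python loops. The tile size is chosen for illustration only; real hardware works on specific tile shapes and low-precision number formats.

```python
from typing import List

Matrix = List[List[float]]


def mma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Matrix multiply-accumulate: D = A x B + C."""
    n, k, m = len(a), len(b), len(b[0])
    d = [[c[i][j] for j in range(m)] for i in range(n)]  # start from the accumulator C
    for i in range(n):
        for j in range(m):
            for p in range(k):
                d[i][j] += a[i][p] * b[p][j]  # one multiply and one add per step
    return d


if __name__ == "__main__":
    identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    a = [[float(i * 4 + j) for j in range(4)] for i in range(4)]
    ones = [[1.0] * 4 for _ in range(4)]
    print(mma(a, identity, ones))  # A x I + 1: A with 1 added to every element
```

A tensor core performs the whole triple loop for a tile as a single instruction. A shader core would need many separate multiply and add steps to do the same.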
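The geometry behind “the path of a ray and its bounce” fits in a few lines. First find where a ray hits a surface, then reflect its direction about the surface normal with r = d - 2(d·n)n. This Python sketch uses a single sphere for brevity. As far as I know, hardware RT cores mostly accelerate intersection tests against bounding boxes and triangles, and the bounce itself is usually computed in shader code. Treat it as an illustration of the math, not of how any vendor’s RT core is built.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(v: Vec3, s: float) -> Vec3:
    return (v[0] * s, v[1] * s, v[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hit_sphere(origin: Vec3, direction: Vec3,
               center: Vec3, radius: float) -> Optional[float]:
    """Distance along a unit-length ray to the nearest hit in front of it, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c                  # discriminant of t^2 + 2bt + c = 0
    if disc < 0:
        return None                   # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer intersection first
        if t > 0:
            return t
    return None                       # the sphere is behind the ray


def reflect(d: Vec3, n: Vec3) -> Vec3:
    """Bounce direction d off a surface with unit normal n: r = d - 2(d.n)n."""
    return sub(d, scale(n, 2.0 * dot(d, n)))


if __name__ == "__main__":
    origin, direction = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
    center, radius = (0.0, 0.0, 5.0), 1.0
    t = hit_sphere(origin, direction, center, radius)
    if t is not None:
        point = add(origin, scale(direction, t))
        normal = scale(sub(point, center), 1.0 / radius)
        print("hit at", point, "bounces toward", reflect(direction, normal))
```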


All cores perform operations simultaneously, which is why they are so efficient. This is how graphics work on every modern NVIDIA, AMD and Apple platform.

Here again it might seem that Apple can do everything at once with a single core. But by their very nature, GPU units cannot match CPU cores in complexity; otherwise they would be much slower.

Now let’s see what this looks like in practice, separately for NVIDIA and Apple GPUs.

NVIDIA GPU architecture. Thousands of cores are easy to find

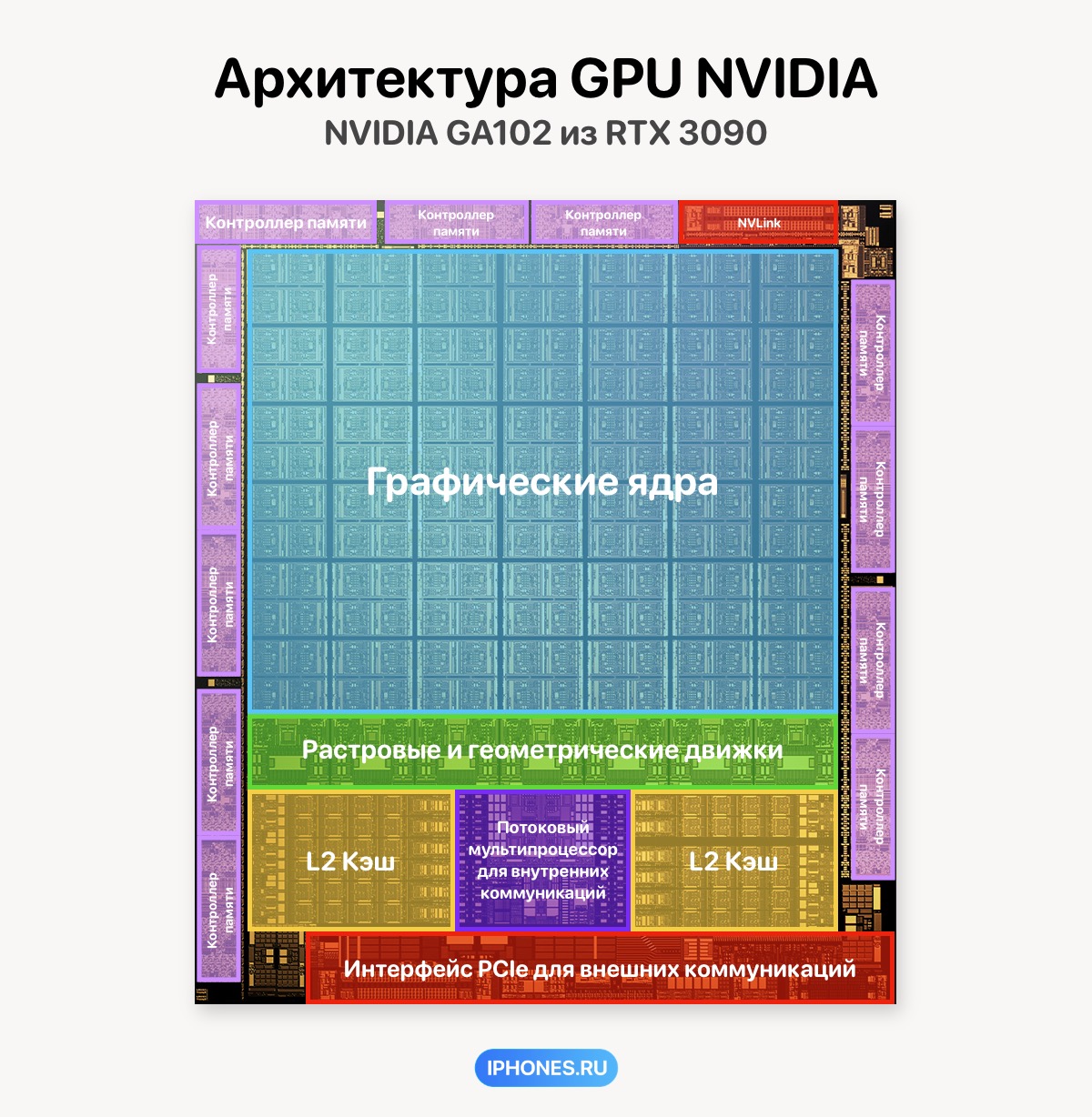

As an example, let’s take the GA102 processor architecture, which is the basis for all graphics cards from the RTX 3080 to the RTX 3090 Ti.

The chip consists of several blocks: cache, VRAM memory controllers, a multiprocessor that connects all the components and, most importantly, the graphics block, where all the calculations take place.

- Because the GPU performs many tasks of the same type in parallel, the computing components inside the graphics block are laid out like a conveyor with 7 identical lanes that all perform the same simple actions.

These lanes are called GPCs (Graphics Processing Clusters).

- Each cluster consists of 12 streaming multiprocessors (SMs) and one raster engine.

- Each SM consists of four warps (strictly speaking, four processing blocks, each of which schedules warps of 32 threads), four texture units and one ray tracing core.

- Finally, each warp contains 32 CUDA (shader) cores and 1 tensor core.

- Each CUDA core consists of basic arithmetic logic units (ALUs), which perform the necessary graphics calculations.

This adds up to 10,752 CUDA cores, 336 tensor cores, 336 texture units and 84 ray tracing cores.
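These totals follow directly from multiplying down the hierarchy. The Python check below uses only the per-level counts listed above for the full GA102 chip. Retail cards based on GA102 ship with some SMs disabled, so their counts are lower.

```python
from typing import Dict

# Per-level counts for the full GA102 chip, as listed above.
GPCS = 7              # graphics processing clusters
SMS_PER_GPC = 12      # streaming multiprocessors per GPC
WARPS_PER_SM = 4      # processing blocks ("warps") per SM
CUDA_PER_WARP = 32    # CUDA cores per processing block
TENSOR_PER_WARP = 1
TEXTURE_PER_SM = 4
RT_PER_SM = 1


def ga102_totals() -> Dict[str, int]:
    sms = GPCS * SMS_PER_GPC
    warps = sms * WARPS_PER_SM
    return {
        "SM": sms,
        "CUDA": warps * CUDA_PER_WARP,
        "tensor": warps * TENSOR_PER_WARP,
        "texture": sms * TEXTURE_PER_SM,
        "RT": sms * RT_PER_SM,
    }


if __name__ == "__main__":
    for unit, count in ga102_totals().items():
        print(f"{unit:8s} {count:6d}")
```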

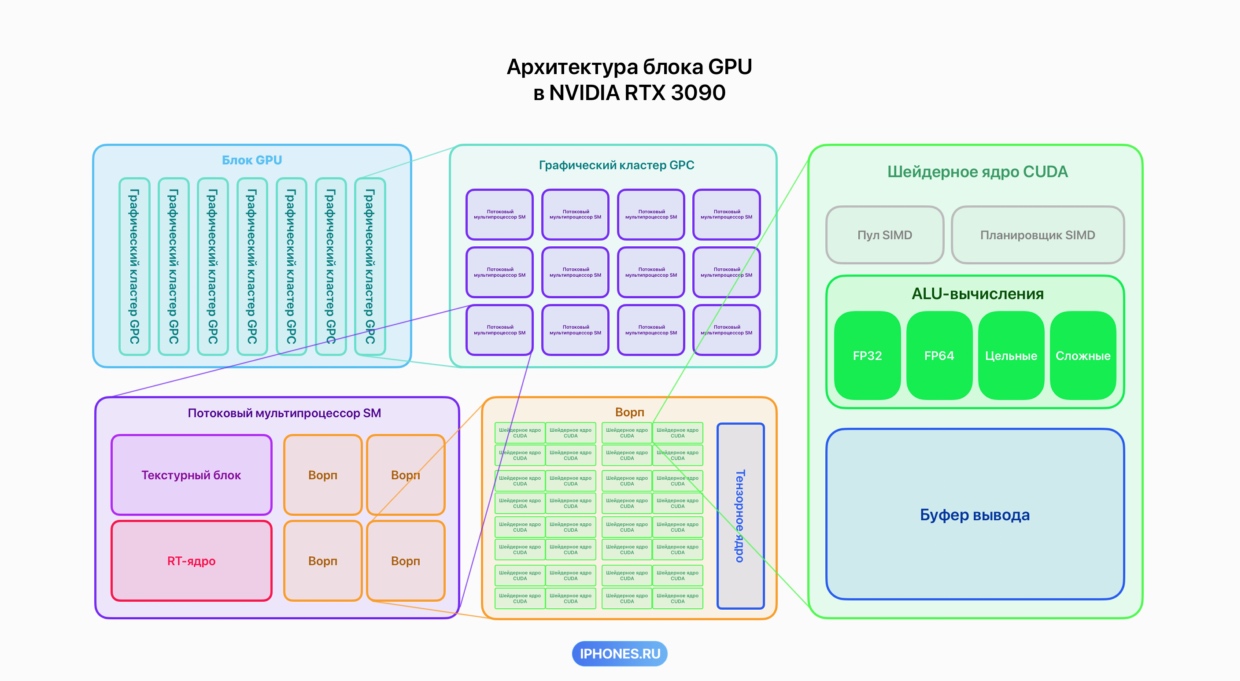

The general scheme of “wrapping” blocks into one another looks like this:

Compute block → 7 GPC graphics clusters → 12 SM streaming multiprocessors → 4 warps, 4 texture units and 1 RT core → 32 shader cores and 1 tensor core.

Depending on the generation and tier of the chip, the number and design of the cores change, but the way cores are counted and the overall structure have stayed the same for the past 20 years.

NVIDIA and AMD use the word “core” for the most basic computing units, which process data at the simplest level.

You may be surprised at how similar Apple’s block hierarchy is to the example above.

Apple GPU architecture. Hundreds of small cores are hidden inside large ones

Let’s take the 10-core GPU of the M3 as an example. It has the same architecture as the A17 Pro in the iPhone 15 Pro and the A18 and M4 series chips.

We are talking about several generations at once because all of them use an architecture Apple calls “Apple family 9”, that is, the ninth generation of graphics the company has developed for its processors, launched in 2023.

The various M3, M4 and A18 versions get more power than the A17 Pro by scaling this architecture.

Since the M3 has the GPU and CPU on the same chip, some parts of the chip belong to both processors.

These include the shared system cache, the memory management units, the multiprocessor that connects the components and the graphics block itself, built from what Apple calls cores.

- As in NVIDIA processors, the main graphics unit of the M3’s GPU is divided into 10 graphics clusters; these are what Apple calls cores.

- Each core contains 16 compute units (CUs), the analogue of streaming multiprocessors in NVIDIA chips.

- Each CU has 8 execution units (EUs), a cache unit and microprocessors for data management.

- Each EU has one shader core (SC), the analogue of a CUDA core, plus one RT core and one texture processing unit.

- Each shader core consists of several arithmetic logic units (ALUs), pools for processing and scheduling SIMD (Single Instruction, Multiple Data) instructions, and a memory block for the work currently in progress.
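SIMD means one instruction is issued once and applied to every lane of a group at the same time. This Python sketch models a hypothetical 32-lane group. The width is an assumption chosen for illustration. An active-lane mask lets lanes that took a different branch keep their old values, which is a common way GPUs handle divergence.

```python
from typing import Callable, List

LANES = 32  # hypothetical SIMD group width, chosen for illustration


def simd_execute(op: Callable[[float, float], float],
                 a: List[float], b: List[float], mask: List[bool]) -> List[float]:
    """Apply one instruction to all lanes; inactive lanes keep their value from a."""
    if not (len(a) == len(b) == len(mask) == LANES):
        raise ValueError("operands must have one value per lane")
    return [op(x, y) if active else x for x, y, active in zip(a, b, mask)]


if __name__ == "__main__":
    a = [float(i) for i in range(LANES)]
    b = [2.0] * LANES
    # Only even lanes are active, e.g. after a branch the odd lanes did not take.
    mask = [i % 2 == 0 for i in range(LANES)]
    print(simd_execute(lambda x, y: x * y, a, b, mask)[:6])
```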

The general scheme of “wrapping” blocks into one another looks like this:

Compute block → 10 large cores → 16 CU compute units → 8 EU execution units → 1 shader core, 1 texture unit and 1 RT core.

So in this case it is the shader core that corresponds to the CUDA core NVIDIA counts in its processors.

There are no tensor cores in the Apple GPU itself. Instead, the M- and A-series chips have two other types of specialized blocks, AMX units and the NPU, designed to accelerate matrix calculations and tensor operations.

Another difference: according to Apple, the M3 and newer GPUs have as many ray tracing cores and texture units as shader cores. NVIDIA cards have at least an order of magnitude fewer of them than CUDA cores.

As a result, the M3 has 10 cores by Apple’s logic and 1280 shader cores if we count the NVIDIA way. Compared with entry-level cards, the numbers now line up.
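Counting the M3’s GPU the NVIDIA way is again just multiplication down the hierarchy. This sketch uses the per-level counts from this article’s reconstruction of Apple’s design; Apple publishes few of these details. It then compares the result with the entry-level cards from the benchmark list.

```python
# Apple M3 GPU hierarchy as reconstructed in this article.
APPLE_CORES = 10     # what Apple calls "cores"
CU_PER_CORE = 16     # compute units per core
EU_PER_CU = 8        # execution units per CU
SHADER_PER_EU = 1    # shader cores per EU

ENTRY_LEVEL = {"NVIDIA GTX 1060 6GB": 1280, "AMD RX 6500 XT": 1024}


def m3_shader_cores() -> int:
    return APPLE_CORES * CU_PER_CORE * EU_PER_CU * SHADER_PER_EU


if __name__ == "__main__":
    apple = m3_shader_cores()
    print(f"Apple M3: {APPLE_CORES} 'cores' = {apple} shader cores")
    for name, cores in ENTRY_LEVEL.items():
        print(f"{name:20s} {cores} cores ({cores / apple:.2f}x the M3 count)")
```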

In the end, it all depends on who counts the blocks in the GPU.

To work out how Apple’s GPU cores are structured, I had to cross-check data from several open sources, only one of which came from Apple itself.

The company shares almost none of the real technical specifications of its processors, so if you spot an error, don’t hesitate to write in the comments.

Moreover, even with NVIDIA’s relative openness, specialized sources can miss details too.

For example, in my previous article on GPUs, I did not mention that each SM streaming multiprocessor in the RTX 3080 has 4 texture units. I only found them this time.

Still, even this abstract view of the architecture shows how differently Apple and NVIDIA designed their graphics cores.

For example, Apple placed texture units and ray tracing cores at the same level as the basic shader cores, so all three are equal in number.

NVIDIA, by contrast, uses far more shader cores: each SM has only one texture unit per 32 shader cores and a single ray tracing core for all 128.
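The difference in layout comes down to ratios. This sketch computes shader cores per texture unit and per ray tracing core for one repeating block of each design. The Apple figures come from this article’s reconstruction. The GA102 figures are 4 warps of 32 CUDA cores, 4 texture units and 1 RT core per SM.

```python
from fractions import Fraction
from typing import Dict, Tuple

# (shader cores, texture units, RT cores) in one repeating block, per this article.
LAYOUTS: Dict[str, Tuple[int, int, int]] = {
    "Apple M3, per EU": (1, 1, 1),
    "NVIDIA GA102, per SM": (4 * 32, 4, 1),  # 4 warps x 32 CUDA cores
}


def shaders_per_unit(layout: Tuple[int, int, int]) -> Tuple[Fraction, Fraction]:
    """Shader cores per texture unit and per ray tracing core."""
    shaders, texture, rt = layout
    return Fraction(shaders, texture), Fraction(shaders, rt)


if __name__ == "__main__":
    for name, layout in LAYOUTS.items():
        per_tex, per_rt = shaders_per_unit(layout)
        print(f"{name:22s} {per_tex} shaders per texture unit, {per_rt} per RT core")
```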

But one conclusion still suggests itself.

GPUs in both Apple and NVIDIA chips are built hierarchically. In both cases, they are divided into blocks, each of which is divided into smaller blocks, and so on, over four levels of nesting.

The only difference is the level of the architecture at which each company calls a computing unit a “core”.

NVIDIA gives that name to the most basic computing units, while Apple gives it to the largest clusters.

The reasoning on both sides is clear. Thousands of cores make NVIDIA’s graphics cards look impressive, while Apple uses simpler numbers to make its lineup (and pricing) easier to understand.

So, once again, it all comes down to ordinary marketing.


Source: www.iphones.ru